Gary Illyes just published this blog post asking webmasters and developers to adopt an efficient caching policy. This is generally a win-win situation for sites that don’t change content often. Your server will thank you!

https://developers.google.com/search/blog/2024/12/crawling-december-caching

What does the blog say?

The source explains that Google’s crawlers support two methods for determining if content on a website has changed: ETag and Last-Modified. These methods help Google decide whether to use a cached copy of a page or download a fresh one. Using these methods can make a site load faster and more efficiently for users.

ETag

ETag is a unique identifier, like a fingerprint, assigned to the content of a webpage. When a crawler first visits a page, it saves the ETag value. On subsequent visits, the crawler sends the saved ETag value back to the server in a request header called If-None-Match. The server compares the incoming ETag to the current ETag for the page.

- If the ETags match, the server sends back a response code of “304 Not Modified” without the page content. The crawler, or the user’s browser, then uses its locally cached version of the page.

- If the ETags don’t match, meaning the content has changed, the server sends back the new content along with a new ETag.
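The exchange above can be sketched from the server's side in a few lines of Python. This is a minimal illustration, not how any particular web server implements it: the function names and the idea of deriving the ETag from a SHA-256 hash of the body are assumptions made for the example.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Derive an ETag from the body (hypothetical scheme: truncated SHA-256)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond_with_etag(
    body: bytes, if_none_match: Optional[str] = None
) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, payload) for a GET with an optional If-None-Match."""
    etag = make_etag(body)
    headers = {"ETag": etag}
    if if_none_match is not None:
        # The header may carry several comma-separated ETags, or "*".
        candidates = [tag.strip() for tag in if_none_match.split(",")]
        # If-None-Match uses weak comparison, so ignore a leading "W/".
        candidates = [tag[2:] if tag.startswith("W/") else tag for tag in candidates]
        if "*" in candidates or etag in candidates:
            # Content unchanged: send the validator back, but no body.
            return 304, headers, b""
    # First visit, or the content changed: send the full page with its ETag.
    return 200, headers, body


# First crawl gets the page; the second crawl replays the saved ETag.
status, headers, _ = respond_with_etag(b"<h1>Hello</h1>")
revisit_status, _, _ = respond_with_etag(b"<h1>Hello</h1>", headers["ETag"])
```

Here `status` is 200 and `revisit_status` is 304, because the body, and therefore the ETag, is the same on both visits.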

Last-Modified / If-Modified-Since

Last-Modified works similarly to ETag, but instead of a unique identifier, it uses the date and time the page was last modified. The crawler saves the Last-Modified date and, on subsequent visits, sends it back to the server in a request header called If-Modified-Since.

- If the page has not been modified since the date sent by the crawler, the server sends back “304 Not Modified” without the content. The crawler uses its cached copy.

- If the page has been modified since that date, the server sends the new content along with a new Last-Modified date.
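A rough sketch of the same check for dates, using only Python's standard library. The function name is made up for the example, and it assumes the server knows the page's modification time as a timezone-aware UTC `datetime`.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def respond_with_last_modified(body, last_modified, if_modified_since=None):
    """Return (status, headers, payload); last_modified must be an aware UTC datetime."""
    # HTTP dates have one-second resolution, so drop microseconds before comparing.
    last_modified = last_modified.replace(microsecond=0)
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}
    if if_modified_since:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            since = None  # An unparseable date is ignored and the full page is sent.
        # Only compare when the parsed date carries a timezone (e.g. "GMT").
        if since is not None and since.tzinfo is not None and last_modified <= since:
            return 304, headers, b""
    return 200, headers, body


modified = datetime(2024, 12, 1, 10, 0, 0, tzinfo=timezone.utc)
first_status, first_headers, _ = respond_with_last_modified(b"page", modified)
second_status, _, _ = respond_with_last_modified(
    b"page", modified, first_headers["Last-Modified"]
)
```

The second request replays the date from the first response, so the server can answer 304 without sending the body again.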

Recommendations from Google

The source makes the following recommendations:

- Use ETag whenever possible as it’s less prone to errors.

- Use both ETag and Last-Modified if you can.

- Only require a cache refresh (that is, change the ETag or Last-Modified value) when there are significant changes to the content. Minor changes, like updating a copyright date, don’t necessitate a refresh.

- Format the Last-Modified date correctly according to the HTTP standard.

- Consider setting the max-age directive in the Cache-Control header to guide crawlers on how long to keep a page cached.
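As a small illustration of these recommendations together, the sketch below builds all three response headers. The helper name, the version string used as an ETag, and the one-day `max-age` are assumptions for the example; `formatdate(..., usegmt=True)` from the standard library produces the date format the HTTP standard expects.

```python
from email.utils import formatdate


def caching_headers(version: str, modified_epoch: float, max_age_seconds: int) -> dict:
    """Build ETag, Last-Modified and Cache-Control headers for one response."""
    return {
        # ETag values are quoted strings.
        "ETag": f'"{version}"',
        # HTTP dates are always expressed in GMT.
        "Last-Modified": formatdate(modified_epoch, usegmt=True),
        # max-age is given in seconds.
        "Cache-Control": f"max-age={max_age_seconds}",
    }


# Hypothetical page: content version "v42", modified at Unix time 0, cacheable for a day.
example_headers = caching_headers("v42", 0, 86400)
```

With these inputs, `example_headers["Last-Modified"]` is `Thu, 01 Jan 1970 00:00:00 GMT`.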

The source emphasizes that using these methods benefits both users, by making pages load faster, and website owners, by potentially reducing hosting costs.

Prerequisites:

As an SEO, reading up on these concepts will help your conversations with your web team:

- CDN hit/miss ratio

- Cache budget

- Origin locations

- Eviction policy

- 304 vs 200 – It is also good to know what percentage of crawls returned 304 vs 200
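To make the last point concrete, here is a minimal sketch that counts response codes for Googlebot requests. The log layout (status code, a space, then the user agent) and the sample lines are invented for the example; real CDN or server logs will need their own parsing.

```python
from collections import Counter

# Made-up log lines in a simplified "STATUS USER-AGENT" layout.
SAMPLE_LOG = [
    "304 Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "200 Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "304 Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "200 Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "304 Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
]


def status_share(lines, agent_token="Googlebot"):
    """Return the share of each status code among requests matching agent_token."""
    counts = Counter()
    for line in lines:
        status, _, user_agent = line.partition(" ")
        if agent_token in user_agent:
            counts[status] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {status: count / total for status, count in counts.items()}


shares = status_share(SAMPLE_LOG)
```

On the sample lines, three of the four Googlebot requests returned 304, so `shares` is `{"304": 0.75, "200": 0.25}`.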

Our Experience

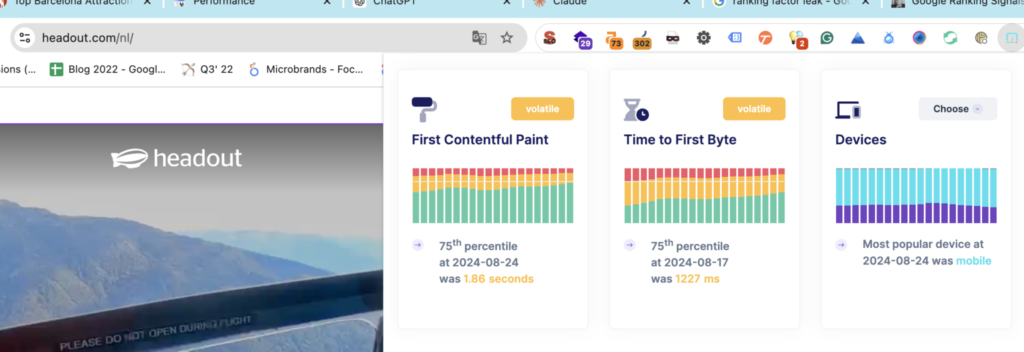

The platform and SEO teams at www.headout.com spent some time in 2022 figuring out why, despite having a CDN, we had poor page speed.

Only when we dug into real-time logs did we find that most Googlebot crawls were CDN misses. Googlebot crawls from the US, and instead of getting the cached page from the CDN there, it frequently had to hit our origin server. That meant terrible TTFBs and slow subsequent page loads.

TTFB was 0.8s on a CDN hit and 2.2s on a CDN miss.

– New Relic

This brings us to the takeaways:

Any teams looking to set their caching policy should consider the following factors:

- CDN budget & eviction policy – If you have a large site, there is a good chance your CDN will NOT store all of your URLs. Most CDN providers follow an LRU (least recently used) eviction policy. If traffic on your site is heavily skewed toward a few pages, those will almost always be served from the CDN, but less popular pages will see poor page speed despite being on the same infrastructure.

- CDN warming – Continuing from the point above: if your TTL is 24 hours, you can run a cron job that requests your pages to warm the CDN, so that real visitors and crawlers get hits.

- Cache strategy – We finally settled on a stale-while-revalidate policy as best suited to our needs. This strategy allows a cache (like a browser or a CDN) to serve a cached version of a resource even if it is “stale” (past its expiration date), while simultaneously making a background request to validate the resource and fetch a fresh copy if needed. This improves perceived performance by serving stale content quickly while ensuring the user eventually receives the updated version.

- Type of content – Your site can have different page types and templates, and each should have a tailored cache policy:

  - Live blog – Almost no caching, plus a call to Google every time an update is pushed (use no-cache/no-store policies)

  - Realtime pricing (e.g. gold rates) – The cache lifetime should track how often the content changes. In this case, perhaps every 24 hours?

  - Static blog – Cache for as long as you can (use max-age)
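The policies above could be written down roughly as follows. This is a sketch under stated assumptions: the page-type keys, the lifetimes, and both function names are hypothetical, and the right numbers depend on how often each template actually changes. `cache_decision` illustrates how a cache treats a response under `max-age` plus `stale-while-revalidate`.

```python
# Hypothetical page types and lifetimes (in seconds); tune them per template.
CACHE_POLICIES = {
    "live_blog": "no-cache",              # always revalidate with the origin
    "realtime_pricing": "max-age=86400",  # content changes about once a day
    "static_blog": "max-age=2592000",     # 30 days
    "default": "max-age=600, stale-while-revalidate=86400",
}


def cache_control_for(page_type: str) -> str:
    """Pick the Cache-Control value for a page type, falling back to the default."""
    return CACHE_POLICIES.get(page_type, CACHE_POLICIES["default"])


def cache_decision(age: int, max_age: int, stale_while_revalidate: int) -> str:
    """Describe what a cache does with a stored response of the given age."""
    if age < max_age:
        return "fresh"
    if age < max_age + stale_while_revalidate:
        # Serve the stale copy immediately and refresh it in the background.
        return "serve stale, revalidate in background"
    return "expired"


decision = cache_decision(age=900, max_age=600, stale_while_revalidate=86400)
```

A response that is 900 seconds old under the default policy is past its 600-second `max-age` but still inside the revalidation window, so `decision` is `"serve stale, revalidate in background"`.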

For more details, you can read this guide.

How SEOs Should Work With Platform Teams

When we overcome procrastination, we shall publish a detailed guide on headout backstage. Till then, you can reach out to me on Twitter and LinkedIn.